What is Log File Analysis?

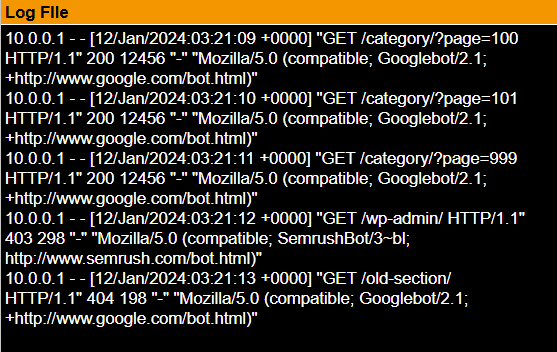

Before diving into its SEO applications, let’s start with the basics. Log files are server-generated records that document every request made to your website. Whether it's a human user loading a page or a search engine bot crawling your site, each request gets logged with details like:- IP address

- Timestamp

- User-agent (e.g., Googlebot, Bingbot, etc.)

- Requested URL

- HTTP status code

- Bytes transferred

- Referrer

Why Log File Analysis Matters for SEO

While SEO tools like Google Search Console or site crawlers provide inferred or sampled data, log files deliver 100% accurate, real world information about how bots actually crawl your site. Here’s why that matters:See Exactly What Googlebot Sees

Search engines determine your site's indexability and ranking potential based on how effectively they can crawl it. Through log file analysis, you can observe:- Which URLs Googlebot is crawling

- How frequently it's visiting

- Whether it’s wasting crawl budget on irrelevant pages (like parameterised URLs or admin sections)

- If important pages are being ignored

Identify Crawl Budget Waste

Google allocates a finite crawl budget per domain, especially for large websites. If bots are spending their time crawling thousands of duplicate, redirecting, or non-canonical URLs, they’re not focusing on your priority content. With log file analysis, technical teams can isolate patterns of crawl inefficiency:- Excessive crawling of faceted navigation pages

- Indexing of filtered/sorted product pages

- Infinite URL loops caused by bad CMS configurations

Detect Crawl Errors and Server Issues

While you might spot crawl errors in Search Console, log files show them in real-time and with more detail. For instance:- 5xx errors may indicate server misconfigurations

- Frequent 404s can highlight broken internal links or deleted content

- 301 chains (multiple redirects) can degrade crawl efficiency and dilute link equity

How to Perform Log File Analysis for SEO

Performing log file analysis involves 5 key steps:Step 1: Obtain the Log Files

Before any analysis can begin, you need access to the raw server logs.What Are You Looking For?

You’ll want the access logs, not error logs or database logs. Access logs record every HTTP request made to your site. Typical filenames:- access.log

- access_log.YYYYMMDD.gz (daily compressed logs)

- access.log.1 (rotated logs)

- In cloud setups: log streams from AWS CloudFront, Google Cloud Logging, Azure Monitor, etc.

Where Can You Find Them?

- Shared Hosting / cPanel: Look in “Raw Access Logs” or “Metrics” under your control panel.

- VPS / Dedicated Servers: Use SSH or FTP to access /var/log/httpd/ or /var/log/nginx/.

- Cloud Hosts: Check logging services (e.g. CloudWatch for AWS, Stackdriver for GCP).

- Content Delivery Networks (CDNs): If you’re using a CDN like Cloudflare, Fastly, or Akamai, request logs separately, they may intercept some bot traffic before it reaches your origin server.

Important Tips:

- Ensure you have log data covering at least 30 days for accurate trend analysis.

- Check the retention policy, some servers only store logs for 7 days unless configured otherwise.

- Use secure access methods (SFTP, SSH) and ensure compliance with privacy laws like GDPR. Avoid storing or sharing IP addresses unless anonymised where necessary.

Step 2: Filter for Search Engine Bots

Raw logs contain every single request, from users, bots, scrapers, and even internal tools. The first step in analysis is to separate genuine search engine bots from all other traffic.Identify Bot Traffic

Look at the User-Agent string in the logs. Common genuine bots include: Bot Name User-Agent Substring Googlebot (desktop) Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html) Googlebot (mobile) Googlebot-Mobile or Googlebot/Smartphone Bingbot bingbot YandexBot YandexBot Baiduspider Baiduspider DuckDuckBot DuckDuckBotValidate Bots to Avoid Fakes

Some bots spoof these user-agents. Confirm authenticity by:- Reverse DNS lookup: Verify that the IP address resolves to a known Googlebot/Bingbot domain.

- Use IP lists from search engines: Google publishes theirs here.

- Dedicated tools: Screaming Frog Log File Analyser and Botify offer built-in bot validation.

Step 3: Parse and Clean the Data

Log files are not user-friendly by default, they need to be parsed into structured data for analysis.Parsing Tools

You can use:- Excel or Google Sheets (for small logs): Import .csv or .txt, split text to columns using delimiters like space or quotes.

- Python: Ideal for handling large logs. Use libraries like pandas, regex, or tools like Apache Log Parser.

- Log Analysis Software:

- Screaming Frog Log File Analyser – user-friendly, SEO-focused

- OnCrawl / Botify – cloud-based, enterprise-grade

- ELK Stack (Elasticsearch, Logstash, Kibana) – custom, scalable analysis for big sites

- AWStats / GoAccess – traditional log analysis tools

Fields You’ll Need

Parse at minimum:- Timestamp

- IP address

- User-agent

- Requested URL

- HTTP status code

- Referrer (optional but helpful)

- Bytes served (for bot bandwidth analysis)

Cleaning the Data

Once parsed:- Remove noise: Filter out favicon requests, .css, .js, .png, and bot spoofers.

- Normalise URLs: Remove tracking parameters (?utm_source=, ?ref=, etc.) unless intentionally analysed.

- Group similar paths: E.g., all /product/ URLs, or paginated /blog/page/ URLs.

Step 4: Analyse the Data

Now that your data is clean, the real insights begin. Here are the most critical things to look for:Crawl Frequency

How often do search engine bots hit your pages?- Sort by URL and count hits per day.

- Highlight high-value pages (e.g., top-selling products, pillar blog posts).

- Spot pages that should be crawled more but aren’t.

Pages That Have Never Been Crawled

Cross-reference log data with your full URL inventory (e.g., from Screaming Frog or your sitemap).- If a page is never crawled, it may be orphaned, blocked, or incorrectly canonicalised.

Crawl Depth Analysis

Group URLs by click depth from the homepage (1, 2, 3, etc.). Do bots stop crawling at a certain depth?- Bots may not reach deep or poorly linked content.

- Adjust internal linking or flatten site architecture if needed.

Crawl Distribution by Status Code

Look at how many times bots encounter various HTTP status codes: Status Code Meaning SEO Impact 200 OK Ideal 301/302 Redirect Acceptable, but avoid chains 404 Not Found Wasted crawl budget, broken links 500+ Server Error Critical issue, may block indexingBandwidth Use by Bots

Log files often include the number of bytes served. Track which bots consume the most bandwidth.- Googlebot loading large, image-heavy pages may be straining your server.

- Consider optimising media or blocking bots from non-essential assets.

Unusual Patterns

Set up filters for anomalies such as:- Spikes in crawl activity

- Bots requesting strange or outdated URLs

- High volume of 404s or 5xxs in a short period

- Sudden drop in crawl rates (possible site issues or penalties)

Step 5: Take Action on Insights

Insights are only valuable when they lead to measurable improvements. Based on your findings, here’s how you can respond:Fix Crawl Wastage

- Add disallow directives in robots.txt for non-strategic areas (e.g., /search, /cart, /filter?price=).

- Use canonical tags to consolidate similar or duplicate pages.

- Remove legacy or broken URLs from sitemaps.

Improve Important Pages’ Visibility

- Add internal links to under-crawled pages from top-level or frequently crawled pages.

- Ensure high-value pages are linked from XML sitemaps.

- Submit critical URLs for indexing via Google Search Console if necessary.

Resolve Errors

- Fix or remove URLs returning 404s.

- Address server misconfigurations causing 500 errors.

- Eliminate redirect chains by updating links to point directly to the final URL.

Optimise for Mobile Bots

- Ensure responsive design and fast load times for mobile users.

- Check mobile-specific content for crawlability (no client-side JS blocking bots).

- Use <link rel="alternate"> and <link rel="canonical"> properly in mobile scenarios.

Monitor Ongoing Changes

- Set up a monthly crawl report dashboard using tools like Kibana or Looker Studio (via BigQuery).

- Use anomaly detection tools to alert you when crawl patterns shift unexpectedly.

Advanced Log File Analysis Techniques

For mature SEO teams or enterprise-level websites, the basic use cases of log file analysis are just the beginning. Let’s explore some more sophisticated applications that can take your SEO strategy to the next level.Crawl Budget Forecasting

By analysing historical crawl activity, technical teams can predict and optimise future crawl trends. If a new product section is launching or a large number of legacy URLs are being redirected, you can model how Googlebot might react. Use case : Let’s say your site is about to deploy 10,000 new category pages. Using log data, you can assess how long it took for previous launches to be crawled and indexed, helping you estimate timelines and adjust your rollout strategy accordingly.Comparing Bot vs User Behaviour

Pairing log file data with analytics platforms (like GA4 or Matomo) allows you to compare bot traffic vs human traffic. This comparison can uncover discrepancies such as:- High user interest in pages that Googlebot rarely crawls

- Googlebot crawling URLs that receive no traffic, possibly due to thin content or duplication

Segmenting by Bot Type and Device

Modern SEO isn’t just about “Googlebot”; it’s about which Googlebot. With mobile-first indexing, the difference between Googlebot-Mobile and Googlebot-Desktop is critical. Log file analysis helps you:- Validate mobile-first indexing effectiveness

- Ensure responsive content delivery

- Identify mobile-specific errors (e.g. JavaScript rendering failures on mobile only)

Page Importance Scoring

By measuring crawl frequency and combining it with URL depth and backlink data, you can assign a "crawl priority score" to different pages. This helps identify content that:- Google sees as high priority (frequently crawled)

- Users care about (high traffic)

- You want to promote (strategic business pages)

Integrating Log File Analysis into Workflows

It’s one thing to perform an occasional log audit, but true SEO value lies in making log file analysis part of your regular operations.Monthly or Quarterly Reviews

Schedule monthly log audits to catch crawl anomalies early, particularly if:- You publish new content frequently

- You operate in a seasonal industry (e.g. travel, fashion)

- You’ve undergone structural changes (like migrations or redesigns)

Automated Monitoring & Alerts

Using tools like the ELK Stack, DataDog, or custom scripts, you can set up real-time alerts for anomalies such as:- Sudden spikes in 404s

- Bots accessing unexpected URL patterns

- Drop in crawl activity across key folders

DevOps Collaboration

Log file analysis often falls between SEO and infrastructure. By partnering with DevOps or Site Reliability Engineering (SRE) teams, SEOs can ensure:- Log retention policies meet your analysis needs

- CDN logs (e.g. Cloudflare, Akamai) are included

- Server configurations don’t block or misreport bot activity