What Is JavaScript SEO?

JavaScript SEO refers to the process of optimising websites that rely heavily on JavaScript so that search engines can effectively crawl, render, and index their content. Unlike traditional HTML pages, where content is immediately visible to crawlers, JavaScript-powered websites often load or modify content dynamically, meaning the information users see in the browser might not be immediately accessible to search engine bots. JavaScript SEO focuses on closing this gap by ensuring that the critical content and links on your site are visible and understandable to search engines, ultimately supporting your site’s organic visibility.

Why JavaScript Poses a Challenge for SEO

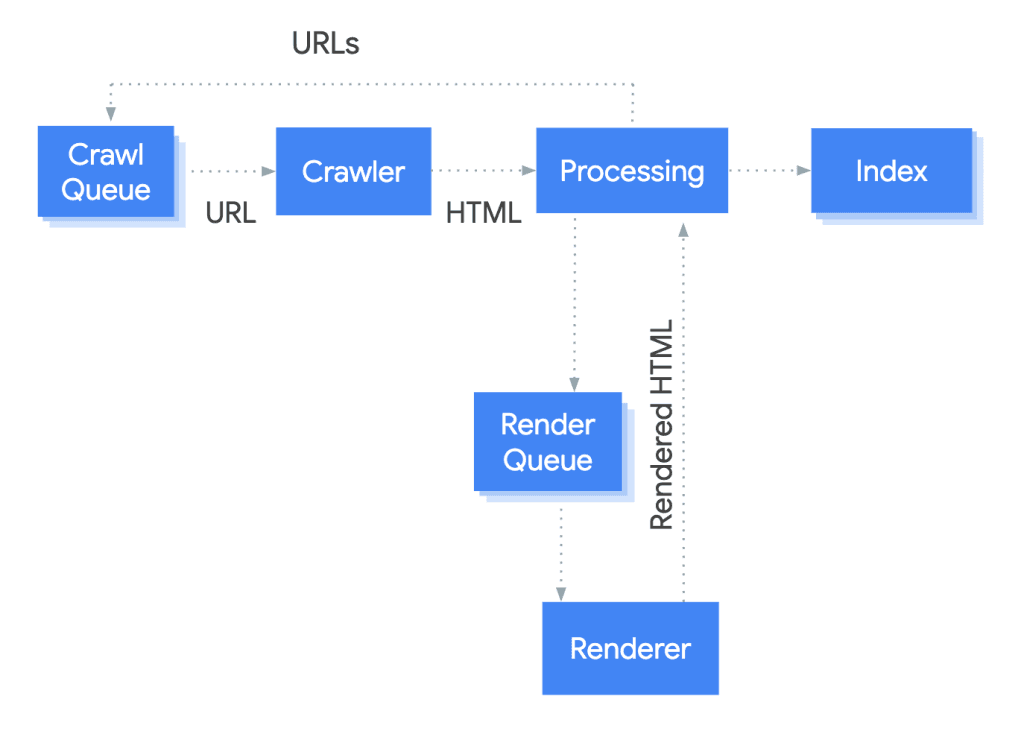

JavaScript isn’t inherently bad for SEO, but it does introduce complexity. To understand why, it’s helpful to break down how Google typically handles a web page:

- Crawling: Googlebot discovers the URL and downloads the initial HTML.

- Rendering: Google needs to execute JavaScript to fully understand the content.

- Indexing: Once rendered, the visible content is evaluated and potentially added to the index.

How Google Renders JavaScript Content

Over the years, Google has improved significantly in its ability to render JavaScript. Googlebot now uses an evergreen version of Chromium, meaning it can render modern JS frameworks like React, Angular, or Vue.js. However, this process is not instantaneous. Here’s how rendering typically works:

Initial Crawl

When Googlebot first encounters a page, it downloads the initial HTML and scans it for content and links without executing any scripts. The page is then queued for rendering, so if the core content isn’t present in the static HTML, Google cannot see it until that later step. This first crawl is efficient but limited: any important information loaded via JavaScript remains temporarily invisible to the crawler.

Rendering Queue

After the initial crawl, the page enters a rendering queue, where Google executes the JavaScript to simulate how a browser would display the page. This step requires significant resources, so it doesn’t happen instantly, especially on large or complex websites. Google waits for the Document Object Model (DOM) to be populated before it can understand the complete structure and content of the page. If the JavaScript takes too long to load or fails, rendering might be incomplete.
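The gap between the initial crawl and the rendered page can be approximated locally. The sketch below is a minimal illustration, not a model of how Googlebot works: it extracts the text available in some HTML without running any scripts, then lists the phrases that only exist after rendering. `RAW_HTML` and `RENDERED_HTML` are made-up samples; in practice the rendered snapshot would have to come from a headless browser or a similar tool, which is assumed here rather than shown.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects text nodes, ignoring the bodies of <script> and <style>."""

    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text that sits outside script/style elements.
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def visible_text(html):
    """Return the text a parser can read from the HTML without executing JS."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def render_dependent(raw_html, rendered_html, phrases):
    """Return the phrases that appear after rendering but not in the raw HTML."""
    raw = visible_text(raw_html)
    rendered = visible_text(rendered_html)
    return [p for p in phrases if p in rendered and p not in raw]


# Hypothetical sample: what the server sends for a client-side rendered page.
RAW_HTML = """<html><head><title>Shop</title><script>window.app = {};</script></head>
<body><h1>Shop</h1><div id="root"></div><script src="/bundle.js"></script></body></html>"""

# Hypothetical sample: the same page after its scripts have populated the DOM.
RENDERED_HTML = """<html><head><title>Shop</title></head>
<body><h1>Shop</h1><div id="root"><p>Blue running shoes</p><a href="/shoes">All shoes</a></div></body></html>"""

if __name__ == "__main__":
    key_phrases = ["Shop", "Blue running shoes", "All shoes"]
    print(render_dependent(RAW_HTML, RENDERED_HTML, key_phrases))
```

Any phrase this reports is content that depends on the rendering step, so it is the content most exposed to delays or rendering failures.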

Post-Render Crawl

Once Googlebot finishes rendering the page, it examines the final output: the content and links that appear in the browser after scripts are executed. At this point, the bot determines what to index and which internal or external links to follow. This step is essential for ensuring JavaScript-loaded text and navigation elements are included in search results. If rendering is successful, your content becomes eligible for indexing just like a traditional HTML page.

Although this works in many cases, problems arise when JavaScript fails to load due to errors, delays, or blocked resources. These issues can prevent Google from ever seeing the page content, which negatively impacts your search performance.

Key Challenges in JavaScript SEO

While Google has made significant strides in rendering JavaScript, it’s far from foolproof. Websites that rely heavily on client-side scripting still face a number of technical hurdles that can affect how content is crawled, indexed, and ultimately ranked.

Delayed or Incomplete Indexing

Because Google renders JavaScript in a second wave, there’s a delay between the time a page is crawled and when it’s fully understood. This delay can slow down how quickly your content appears in search results, or prevent it from being indexed at all if rendering fails.

Broken or Non-Discoverable Links

Links added to the DOM using JavaScript (especially via onclick events or non-anchor elements) might not be seen or followed by Googlebot. This can limit crawl depth and internal linking, two important aspects of SEO.

Blocked Resources

JS rendering requires access to scripts, APIs, and third-party services. If your robots.txt file blocks key resources like JS files, CSS, or images, Googlebot might not be able to fully render the page, resulting in incomplete indexing.

Content Mismatch

Sometimes, the content seen by users and the content seen by Google differ due to client-side rendering issues or race conditions. This can result in poor rankings or even algorithmic penalties if search engines perceive it as cloaking.

JavaScript Rendering Strategies: SSR, CSR, and More

To address these challenges, developers and SEOs need to adopt the right rendering strategy for their website. The main options include:

Client-Side Rendering (CSR)

In CSR, the browser executes JavaScript to build the page. While this provides a fast, app-like experience for users, it depends heavily on search engines being able to render the content. It poses the highest risk for SEO if not handled carefully.

Best for: Web apps with low reliance on organic search.

Server-Side Rendering (SSR)

With SSR, the server generates a fully-rendered HTML page before sending it to the browser. This ensures that content is visible to both users and search engines right away, improving crawlability and indexability.

Best for: Content-heavy sites that rely on SEO for traffic.

Static Rendering / Pre-rendering

In static rendering, content is pre-built into HTML at build time using tools like Gatsby or Nuxt. This combines the benefits of JS-based frameworks with SEO-friendly HTML output.

Best for: Blogs, marketing sites, and documentation that don't change frequently.

JavaScript SEO Best Practices

To make your JavaScript-powered site search-friendly, follow these best practices:

Test Your Pages with Google Tools

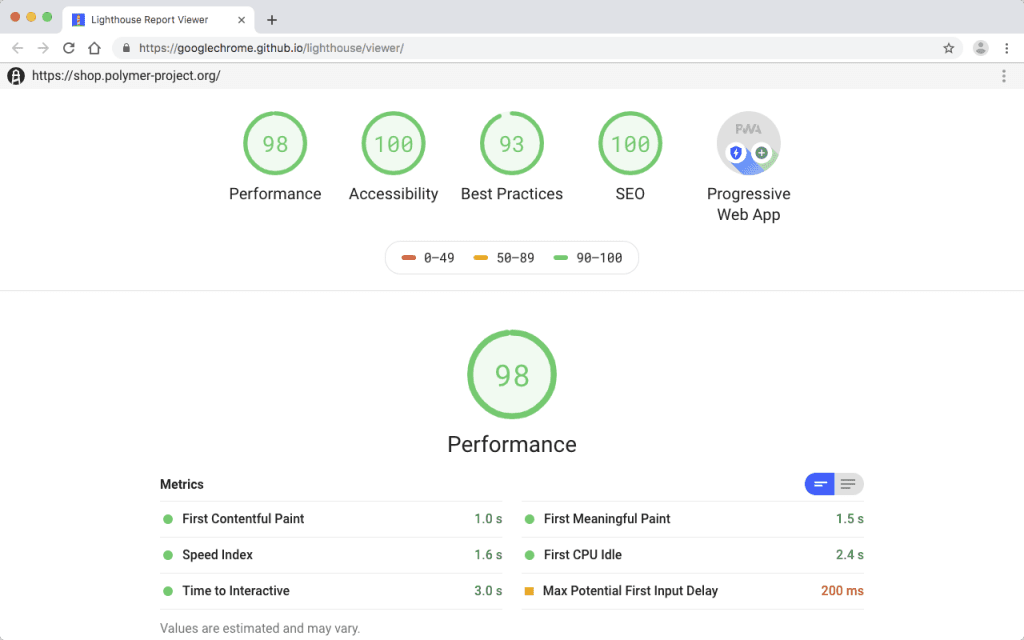

Use Google’s URL Inspection Tool to see how your JS content is rendered. You can also use tools like Lighthouse or Rendertron to preview what Google sees.
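Alongside those tools, two of the problems described earlier (links that only work through onclick handlers, and resources blocked in robots.txt) can be spot-checked with a few lines of standard-library Python. This is a rough sketch under stated assumptions: `PAGE`, `ROBOTS_TXT`, and the example.com URLs are invented samples, and Python's `urllib.robotparser` uses simple prefix matching, so its verdicts may differ from Google's own robots.txt handling (for example, around wildcard rules).

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class LinkAudit(HTMLParser):
    """Separates <a href> links from elements that only navigate via onclick."""

    def __init__(self):
        super().__init__()
        self.crawlable = []      # href values a crawler can follow
        self.onclick_only = []   # tag names that rely on a click handler

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        href = attrs.get("href")
        if tag == "a" and href and not href.startswith(("#", "javascript:")):
            self.crawlable.append(href)
        elif "onclick" in attrs:
            self.onclick_only.append(tag)


def audit_links(html):
    """Return (crawlable hrefs, tags that depend on onclick) for the HTML."""
    parser = LinkAudit()
    parser.feed(html)
    parser.close()
    return parser.crawlable, parser.onclick_only


def blocked_resources(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return [url for url in urls if not rules.can_fetch(user_agent, url)]


# Hypothetical navigation markup mixing real links and click handlers.
PAGE = """<nav>
<a href="/pricing">Pricing</a>
<span onclick="location.href='/blog'">Blog</span>
<a href="#" onclick="openMenu()">Menu</a>
<a href="javascript:void(0)">More</a>
</nav>"""

# Hypothetical robots.txt that blocks the script bundle and the API.
ROBOTS_TXT = """User-agent: *
Disallow: /assets/js/
Disallow: /api/
"""

RESOURCES = [
    "https://example.com/assets/js/app.js",
    "https://example.com/assets/css/site.css",
    "https://example.com/api/products",
]

if __name__ == "__main__":
    print(audit_links(PAGE))
    print(blocked_resources(ROBOTS_TXT, RESOURCES))
```

Anything listed under `onclick_only` is a candidate for conversion to a regular anchor with an `href`, and any script or API endpoint reported by `blocked_resources` is worth re-checking in the URL Inspection Tool.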