What Is Crawl Budget?

Crawl budget refers to the number of pages a search engine, such as Google, is willing and able to crawl on your site within a given timeframe. It’s essentially a balancing act between two key elements:

- Crawl Rate Limit: How frequently Googlebot can access your pages without overloading your server.

- Crawl Demand: How much Google wants to crawl your site, based on factors like page popularity, freshness, and historical crawling behaviour.

Why Crawl Budget Matters for SEO

Crawl budget isn’t something that should keep every website owner up at night. For smaller sites (with fewer than a few thousand URLs), Google typically does a good job of crawling everything that matters. However, for larger websites or platforms with complex URL structures, crawl budget optimisation can be a game-changer. Here’s why crawl budget matters:

Indexing Efficiency

If search engines don’t crawl a page, they can’t index it, and if it’s not indexed, it simply won’t appear in search results. For larger websites, it’s not uncommon for some pages to be missed entirely during crawling, especially if they’re buried deep in the site structure or aren’t linked properly. A well-managed crawl budget ensures that important pages are crawled regularly and thoroughly, allowing them to be indexed and shown to potential users when relevant queries are made.

Prioritising Key Pages

Not all pages on your website are created equal. Product pages, cornerstone content, and landing pages typically offer the highest value to both users and your business goals. If your crawl budget is being consumed by unimportant or low-priority pages, like outdated blog tags or search result URLs, your key content might be overlooked or crawled less frequently. Optimising your crawl budget helps ensure that Googlebot allocates its resources to your most strategically valuable content.

Managing Duplicate and Low-Value Pages

Many websites unintentionally create hundreds of duplicate or near-duplicate pages due to URL parameters, pagination, or CMS quirks. These pages can soak up a disproportionate amount of your crawl budget without adding any real SEO benefit. Left unchecked, this reduces the chances that Googlebot will reach your newer, better-optimised pages. By identifying and removing or deindexing low-value pages, you make space in the crawl budget for content that truly deserves to be seen.

Keeping Content Fresh

Search engines aim to serve users the most up-to-date and relevant content. But if your crawl budget is stretched thin, Googlebot may revisit important pages less frequently, leading to delays in reflecting content changes in search results. This is particularly problematic for websites with frequent updates, such as news sites, event-driven content, or seasonal products. Ensuring that Google can crawl your high-value pages regularly helps keep your search presence timely and accurate.

How to Check Your Crawl Budget

Google doesn’t give you a single number labelled “crawl budget”, but you can piece together the picture using data from Google Search Console and server logs.

1. Google Search Console (GSC)

Head to the Settings section in GSC and look for the Crawl Stats report. This section shows three key metrics:

- Total crawl requests: This shows how many requests Googlebot made to your site each day. A higher number indicates more pages being crawled.

- Total download size: This represents how much data Googlebot has downloaded. Sudden spikes might point to large media files or bloated page structures.

- Average response time: If your server response times are consistently high, Google may reduce its crawling rate to avoid overloading your site.

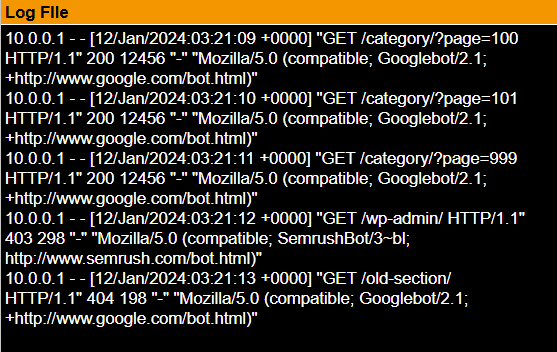

2. Log File Analysis

For a more granular and accurate view of your crawl budget, server log file analysis is essential. Unlike GSC, which gives summary data, log files show exactly which URLs are being requested, when, and by whom. Every time Googlebot crawls your website, it leaves a footprint in your server logs. By analysing this data, you can see:

- Which URLs are actually being crawled: This helps you verify whether your important pages are getting sufficient attention.

- Crawl frequency: See how often bots revisit key pages and how recently they were last crawled.

- Wasted crawl budget: Identify if bots are repeatedly accessing irrelevant pages such as faceted URLs, filtered search results, or duplicate paths.

- Bot types and IPs: Confirm whether the traffic is coming from genuine Googlebot, Bingbot, or other crawlers.

- Crawl prioritisation: Compare how crawl frequency varies between high-value and low-value sections of your site.
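
As a rough illustration of the checks above, here is a minimal Python sketch that counts Googlebot requests per path, records when each path was last crawled, and estimates the share of hits spent on parameterised URLs. It assumes your server writes the common "combined" access log format; the sample log lines, the function name `summarise_googlebot_hits`, and the idea of treating any URL with a query string as potentially wasted crawl are illustrative assumptions, not a standard. It also matches on the user-agent string only, which can be spoofed, so confirming genuine Googlebot traffic would need a separate reverse DNS check.

```python
import re
from collections import Counter
from datetime import datetime
from urllib.parse import urlsplit

# Pattern for the "combined" access log format (assumed; adjust to your server).
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def summarise_googlebot_hits(lines):
    """Return per-path hit counts, last crawl times, and the query-string share."""
    hits = Counter()
    last_crawled = {}
    parameterised = 0
    for line in lines:
        match = LOG_PATTERN.match(line)
        # Skip unparseable lines and requests whose user agent is not Googlebot.
        if not match or "Googlebot" not in match["agent"]:
            continue
        parts = urlsplit(match["url"])
        when = datetime.strptime(match["time"], "%d/%b/%Y:%H:%M:%S %z")
        hits[parts.path] += 1
        if parts.path not in last_crawled or when > last_crawled[parts.path]:
            last_crawled[parts.path] = when
        # Assumption: URLs carrying a query string are candidates for wasted crawl.
        if parts.query:
            parameterised += 1
    total = sum(hits.values())
    wasted_share = parameterised / total if total else 0.0
    return hits, last_crawled, wasted_share


# Hypothetical sample lines, made up for this example.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] "GET /products/blue-widget HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2024:14:02:11 +0000] "GET /search?q=widget&sort=price HTTP/1.1" 200 2048 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [11/Oct/2024:09:15:00 +0000] "GET /products/blue-widget HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [11/Oct/2024:09:16:00 +0000] "GET /products/blue-widget HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

hits, last_crawled, wasted_share = summarise_googlebot_hits(SAMPLE_LOG)
for path, count in hits.most_common():
    print(path, count, last_crawled[path].isoformat())
print(f"Share of Googlebot hits on parameterised URLs: {wasted_share:.0%}")
```

On a real site you would read the lines from your access log file instead of `SAMPLE_LOG`, then compare the hit counts for high-value sections against low-value ones to see where crawling is concentrated.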

Factors That Influence Crawl Budget

Several factors influence how Google assigns and spends crawl budget on your site. These include:

Site Speed and Server Performance

If your website loads slowly or returns errors, Googlebot may crawl fewer pages to avoid overloading your server. Fast, responsive websites tend to enjoy more generous crawling.

Site Structure and Internal Linking

Well-organised websites with clear internal links make it easier for bots to discover important content. A shallow click depth (ideally three clicks or fewer to reach any page) improves crawl efficiency.

Duplicate or Low-Quality Content

Sites filled with duplicate or thin pages may see crawl budget wasted on URLs that don’t contribute to SEO goals. Clean, purposeful content makes better use of limited crawling resources.

Redirect Chains and Errors

Broken links, redirect loops, and long redirect chains can bog down crawling. Googlebot may give up on crawling certain sections of your site if it encounters too many errors.

URL Parameters and Dynamic URLs

E-commerce and CMS-driven websites often generate a flood of near-identical pages via URL parameters. Without control, these can eat up crawl budget fast.

Robots.txt and Noindex Tags

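Robots.txt and noindex do different jobs. A robots.txt disallow rule stops Googlebot from crawling a URL, which saves crawl budget, while a noindex tag keeps a page out of the index but only works if the page can still be crawled. Used well, they steer bots away from pages you don’t want crawled or indexed, but misuse can accidentally block pages you do want crawled. Strategic exclusion is key.

How to Optimise Crawl Budget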

Optimising crawl budget is about making it easier for search engines to find, prioritise, and revisit your most valuable content. Here’s how to do it effectively:

Audit and Prune Low-Value Pages

Identify pages that don’t contribute to search performance (e.g. duplicate tag pages, outdated blog posts, thin category pages) by conducting a site audit. Then consolidate, redirect, or noindex them.

Fix Crawl Errors Promptly

Regularly monitor GSC for crawl errors such as 404s, server errors, and redirect issues. Fix these as soon as possible to prevent wasted crawling effort.

Improve Site Speed

Use SEO tools like Google PageSpeed Insights or Lighthouse to identify bottlenecks in performance. Faster-loading pages consume fewer resources and encourage deeper crawling.

Use Canonical Tags Correctly

Avoid duplicate content issues by clearly signalling the preferred version of a page using canonical tags. This helps Google concentrate its crawl budget where it matters.

Limit Crawl Traps

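If your website generates endless URL variations (e.g. filters, sorts, search queries), use robots.txt rules to block them or canonical tags to consolidate them. Google retired the URL Parameters tool in Search Console in 2022, so parameter handling can no longer be configured there.

Strengthen Internal Linking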

Ensure your key pages are well linked from menus, footers, sitemaps, and within content. A well-linked page is more likely to be discovered and crawled often.

Submit XML Sitemaps

A clean, accurate sitemap helps Googlebot locate important pages. Split large sitemaps by page type or update frequency to give bots useful crawl hints.

Who Should Worry About Crawl Budget?

Not every site needs to worry about crawl budget in great depth. However, crawl budget becomes a more pressing issue if:

- Your site has over 5,000–10,000 URLs

- Your site updates frequently with new or revised content

- You use a CMS that generates dynamic or faceted URLs

- Your crawl stats show frequent errors or declining crawl rates

- Your key pages are not being indexed consistently